
Joochan Kim

I'm a research intern at KIST in Seoul, mentored by Hwasup Lim and Taekgeun You, where I work on Embodied AI, focusing mostly on Vision-Language-Action models. I completed my master's degree at SNU, where I was advised by Byoung-Tak Zhang. During my master's program, I interned at A*STAR, mentored by Haiyue Zhu. I received my bachelor's degree from Yonsei University.


I am actively looking for a Ph.D. position. If you are interested, please contact me by email or LinkedIn!

Research

I'm interested in multimodal AI, generative AI, and data-center AI. Most of my research is about understanding video and images with language guidance, with the goal of developing embodied AI from internet AI. Highlighted papers are those where I made the main contribution.


The Losing Winner: An LLM Agent that Predicts the Market but Loses Money

Youwon Jang*, Joochan Kim*, Byoung-Tak Zhang

NeurIPS Workshop on Generative AI in Finance, 2025

paper / poster

Fine-tuning an LLM for Bitcoin market state prediction improves accuracy but paradoxically worsens trading returns, exposing the dangers of proxy objectives and reward hacking in financial AI.


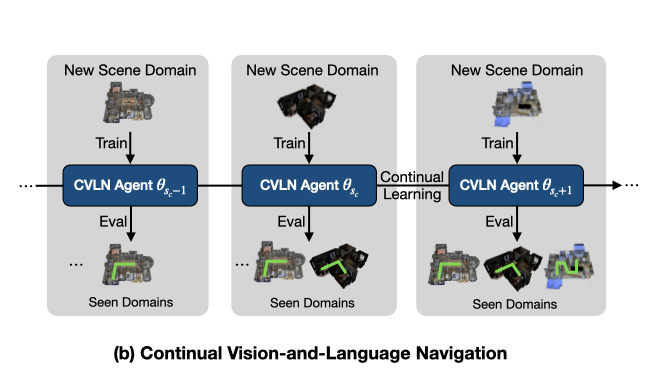

Continual Vision-and-Language Navigation

Seongjun Jeong, Gi-Cheon Kang, Seongho Choi, Joochan Kim, Byoung-Tak Zhang

BMVC, 2025

paper

We propose the Continual Vision-and-Language Navigation (CVLN) paradigm, along with two methods for CVLN: Perplexity Replay (PerpR) and Episodic Self-Replay (ESR).


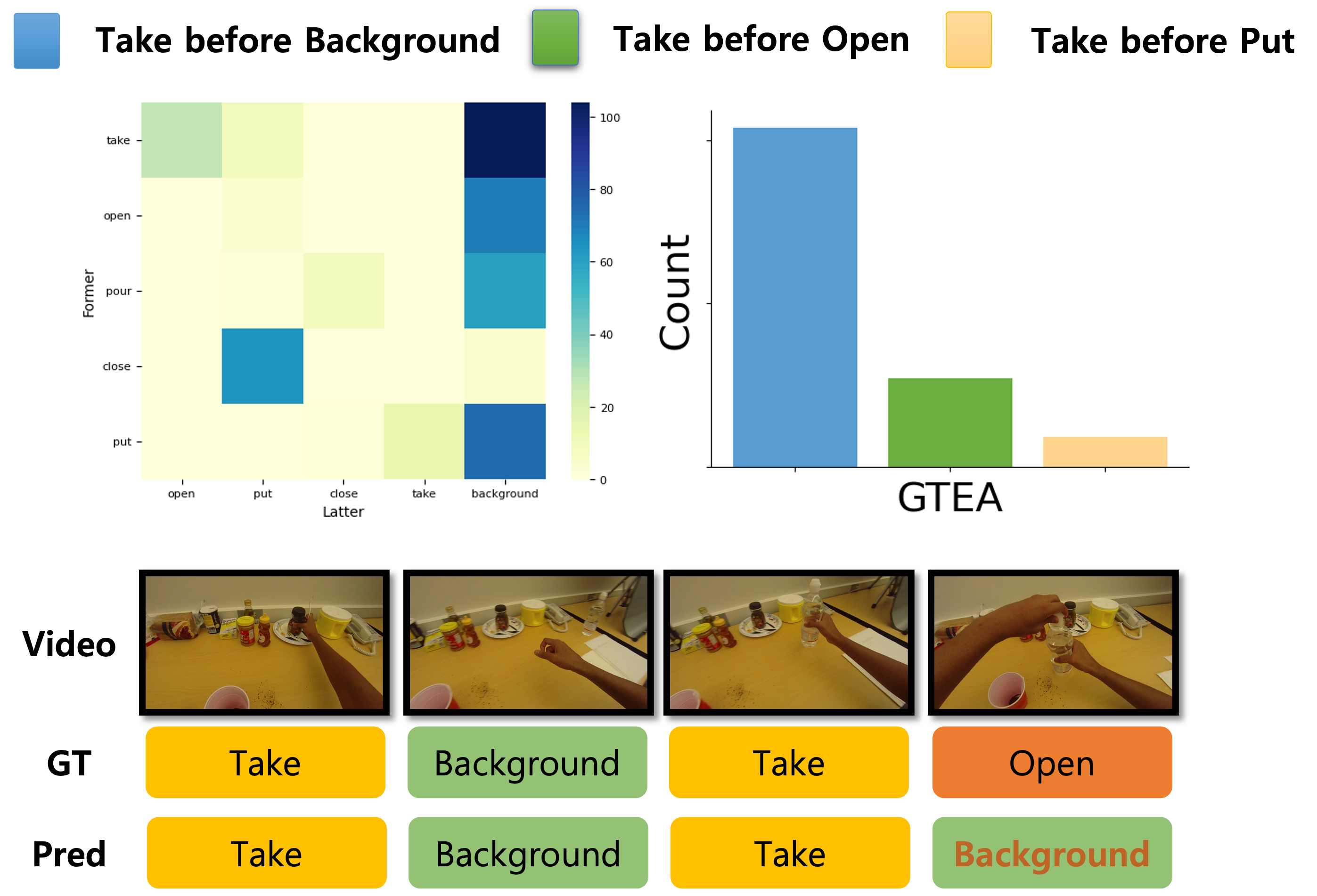

Exploring Ordinal Bias in Action Recognition for Instructional Videos

Joochan Kim, Minjoon Jung, Byoung-Tak Zhang

ICLR Workshop on Spurious Correlation and Shortcut Learning: Foundations and Solutions, 2025

paper / poster

Ordinal bias leads action recognition models to over-rely on dominant action pairs, which inflates their performance. When challenged by action masking and sequence shuffling, these models show a lack of true video comprehension.


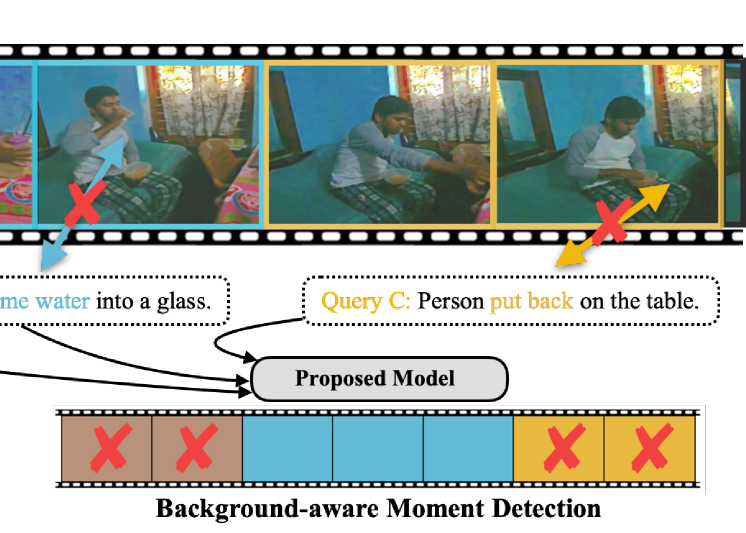

Background-aware Moment Detection for Video Moment Retrieval

Minjoon Jung, Youwon Jang, Seongho Choi, Joochan Kim, Jin-Hwa Kim, Byoung-Tak Zhang

WACV, 2025

paper / code

We propose Background-aware Moment Detection TRansformer (BM-DETR), which carefully adopts a contrastive approach for robust prediction. BM-DETR achieves state-of-the-art performance on various benchmarks while being highly efficient.


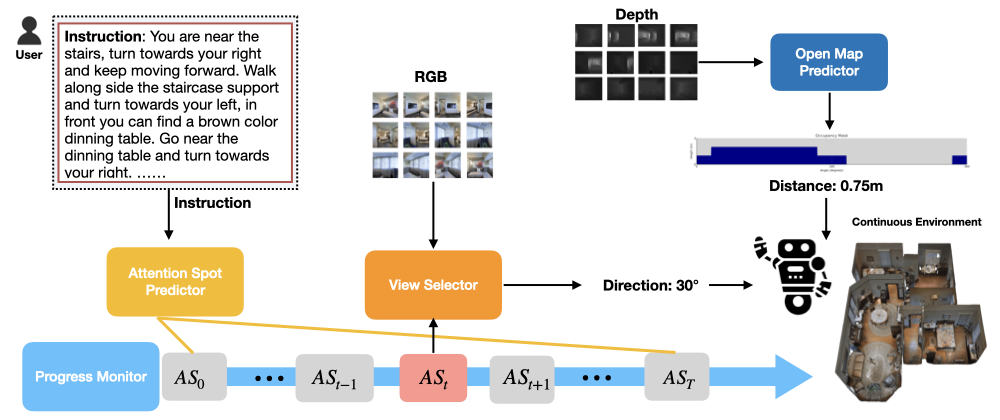

Zero-Shot Vision-and-Language Navigation with Collision Mitigation in Continuous Environment

Seongjun Jeong, Gi-Cheon Kang, Joochan Kim, Byoung-Tak Zhang

CVPR Workshop on Embodied AI, 2025

paper

We propose zero-shot Vision-and-Language Navigation with Collision Mitigation (VLN-CM), which outputs low-level actions while accounting for possible collisions.


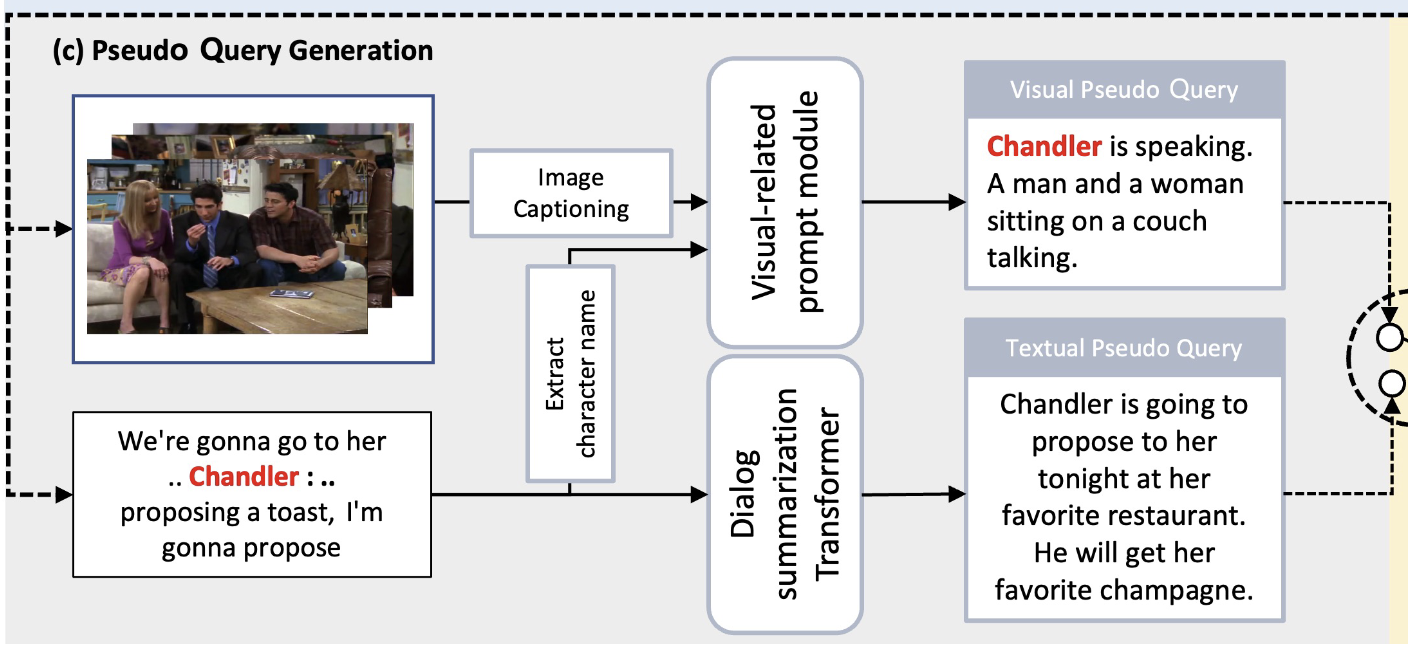

Modal-specific Pseudo Query Generation for Video Corpus Moment Retrieval

Minjoon Jung, Seongho Choi, Joochan Kim, Jin-Hwa Kim, Byoung-Tak Zhang

EMNLP, 2022

paper

We propose a self-supervised learning framework, the Modal-specific Pseudo Query Generation Network (MPGN). First, MPGN selects candidate temporal moments via subtitle-based moment sampling. Then, it generates pseudo queries that exploit both visual and textual information from the selected temporal moments.

Miscellanea

Academic Service

Reviewer, NeurIPS 2025

Teaching

Teaching Assistant, M1522.000300 Spring 2023


Feel free to steal this website's source code. Do not scrape the HTML from this page itself, as it includes analytics tags that you do not want on your own website; use the GitHub code instead. Also, consider using Leonid Keselman's Jekyll fork of this page.